This week is the first week of remote sensing, which is also my last class for the graduate certificate in GIS. As a first week, it served as an introduction to the different ways we can look at imagery to glean information from it.

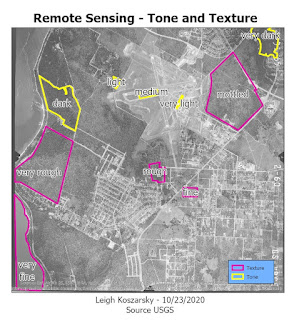

For the first part, we went over tone and texture. Tone is the lightness or darkness of an area which ranges from very light to very dark. Texture is the coarseness of the of an area which ranges from very fine (like a still pond of water) to very coarse (an irregular forest). These two elements lay down the building blocks of being able to identify elements of imagery photos.

|

| Areas of different tone and texture isolated to show the variance. |
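In the lab, tone and texture were judged by eye. A rough numeric analogue can still make the distinction concrete: treat tone as the mean brightness of a small patch of pixels and texture as how much that brightness varies (its standard deviation). This is a simplified proxy assumed for illustration, not the method used in the lab, and the pixel values below are made up.

```python
from statistics import mean, pstdev


def tone_and_texture(patch):
    """Return (tone, texture) for a 2-D grayscale patch of 0-255 values.

    Assumed proxies: tone = mean brightness,
    texture = population standard deviation of brightness.
    """
    pixels = [value for row in patch for value in row]
    return mean(pixels), pstdev(pixels)


# Hypothetical 3x3 patches: a still pond is dark and uniform (fine texture),
# while a forest canopy mixes bright crowns and dark gaps (coarse texture).
pond = [
    [40, 41, 40],
    [41, 40, 41],
    [40, 41, 40],
]
forest = [
    [30, 120, 60],
    [150, 45, 110],
    [70, 160, 35],
]

for name, patch in [("pond", pond), ("forest", forest)]:
    tone, texture = tone_and_texture(patch)
    print(f"{name}: tone={tone:.1f}, texture={texture:.1f}")
```

The pond patch comes out darker and with a much smaller spread than the forest patch, mirroring the "very fine" versus "very coarse" ends of the texture scale.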

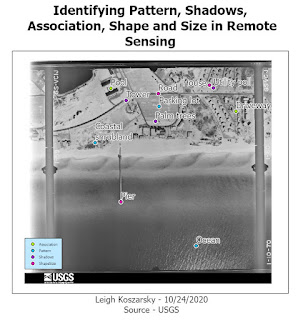

In the second part of the lab, we explored other ways identify features. Shape and size is the most basic of these. By looking at the shape of something and comparing the size of what is around it you are able to determine what it is. Pattern is good for noticing things like a parking lot through the paved lines for cars or the rows of crops in the field. I looked at the shadows of things that were tall and narrow that are difficult to decipher what they are when looking directly above. This technique is especially useful for things like trees or towers. Association is where you look at the elements relative to one element to determine what it is.

|

| Here I used different aspects such as shape and size, pattern, association and shadows to identify different elements within imagery. |

In the last part of the lab, we compared true color imagery against false color infrared (IR) imagery. True color imagery shows a scene roughly as the human eye would see it. False color IR shifts the colors: near-infrared reflectance is displayed as red, red as green, and green as blue. The most obvious differences are that water becomes very dark and vegetation becomes red, because healthy vegetation reflects strongly in the near-infrared while water absorbs it. This makes certain features, especially vegetation and water, easier to discern than in true color.
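The difference between the two composites comes down to which recorded band is sent to which display color. The sketch below assumes the standard color-infrared band assignment and uses made-up reflectance values (scaled 0-255) for one vegetation pixel and one water pixel; `true_color` and `false_color_ir` are hypothetical helper names, not part of any GIS software.

```python
def true_color(bands):
    """Display (R, G, B) for a true color composite: each band shown as itself."""
    return (bands["red"], bands["green"], bands["blue"])


def false_color_ir(bands):
    """Display (R, G, B) for a standard color-infrared composite (assumed mapping):
    near-infrared shown as red, red shown as green, green shown as blue."""
    return (bands["nir"], bands["red"], bands["green"])


# Illustrative (not measured) values: healthy vegetation reflects strongly in
# the near-infrared, while water absorbs almost all of it.
vegetation = {"blue": 30, "green": 60, "red": 35, "nir": 200}
water = {"blue": 60, "green": 50, "red": 30, "nir": 5}

for name, bands in [("vegetation", vegetation), ("water", water)]:
    print(f"{name}: true color RGB={true_color(bands)}, "
          f"false color IR RGB={false_color_ir(bands)}")
```

With this mapping, the vegetation pixel's strong near-infrared value lands in the red display channel, so it appears red, while the water pixel's near-zero near-infrared value leaves it dark.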